How is tech revolutionizing weather forecasting?

The Evolution of Tech in Weather Forecasting

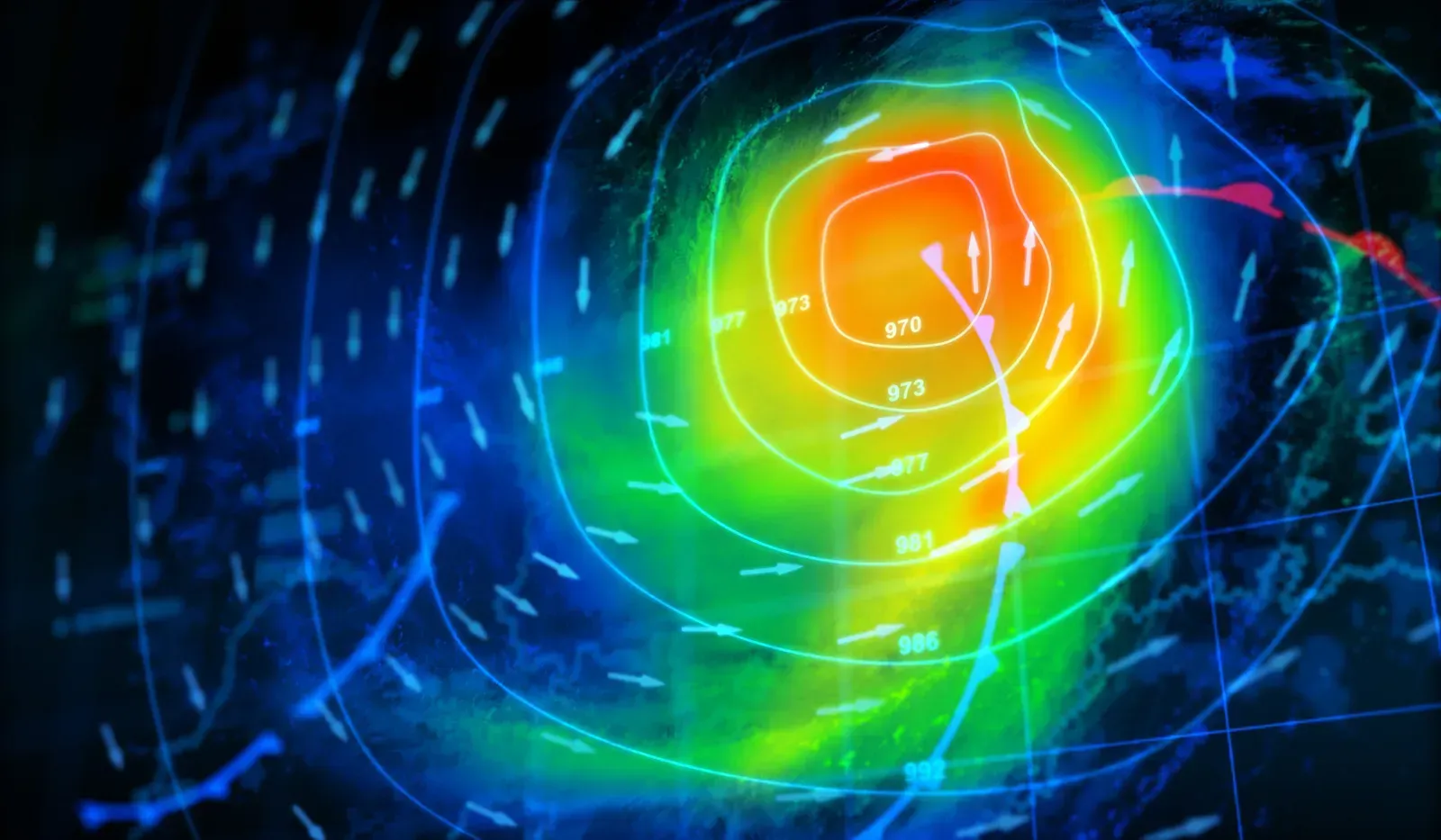

Traditional weather forecasting has relied on numerical weather prediction (NWP) models, which solve the physical equations governing the atmosphere (fluid motion, thermodynamics, moisture) on a three-dimensional grid and step them forward in time. While effective, these models are computationally intensive. They also face the atmosphere’s chaotic nature: small errors in the starting conditions grow as a forecast runs, limiting how far ahead any model can be trusted. AI has introduced a data-driven paradigm, learning from massive datasets drawn from diverse sources to uncover patterns that traditional models miss. Since the early 2010s, advances in computing power and data availability have fueled AI’s rise in meteorology, making forecasts more accurate and accessible.
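To make “chaotic” concrete, here is a toy illustration using the Lorenz-63 system, the three-variable convection model Edward Lorenz published in 1963. It is not an NWP model; it only shows what happens when equations like these are stepped forward numerically from two starting states that differ by one part in a billion. The parameter values are the classic textbook ones, while the perturbation size, time step, and run length are arbitrary choices for this sketch.

```python
import numpy as np

def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz-63 system with its classic parameters."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])

def rk4_step(f, state, dt):
    """One fourth-order Runge-Kutta step: a standard way to advance equations in time."""
    k1 = f(state)
    k2 = f(state + 0.5 * dt * k1)
    k3 = f(state + 0.5 * dt * k2)
    k4 = f(state + dt * k3)
    return state + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def integrate(state, dt=0.01, steps=2500):
    """Integrate forward and return the trajectory, shape (steps + 1, 3)."""
    traj = [state]
    for _ in range(steps):
        state = rk4_step(lorenz63, state, dt)
        traj.append(state)
    return np.array(traj)

truth = integrate(np.array([1.0, 1.0, 1.0]))
# Nudge the initial x by 1e-9, a stand-in for a tiny observation error.
perturbed = integrate(np.array([1.0 + 1e-9, 1.0, 1.0]))
separation = np.linalg.norm(truth - perturbed, axis=1)

for step in (0, 500, 1000, 1500, 2000, 2500):
    print(f"t = {step * 0.01:5.1f}  separation = {separation[step]:.2e}")
```

The gap between the two runs grows roughly exponentially until it is as large as the attractor itself, which is why even a perfect model needs accurate initial observations and why forecast skill decays with lead time.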

By 2025, AI models like Google DeepMind’s GraphCast, NVIDIA’s FourCastNet, and Huawei’s Pangu-Weather have matched or surpassed conventional systems on many standard accuracy benchmarks while running far faster. These innovations are transforming how we predict both daily weather and catastrophic events, with applications for industries, governments, and individuals, from extreme-event warnings to real-time forecasts.
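Claims about precision usually come from scorecards: an error metric is computed for each verification target (a combination of variable, pressure level, and lead time), and the new model’s score is compared with a baseline’s target by target. The sketch below assumes latitude-weighted RMSE, a common choice in benchmarks such as WeatherBench; the grids and RMSE values are made-up toy numbers, not published results.

```python
import numpy as np

def lat_weighted_rmse(forecast, truth, lats_deg):
    """RMSE over a (lat, lon) grid, weighting each latitude row by cos(latitude).

    On a regular latitude-longitude grid, cells shrink toward the poles, so an
    unweighted mean would over-count polar errors.
    """
    weights = np.cos(np.deg2rad(lats_deg))
    weights = weights / weights.mean()           # normalise so the weights average to 1
    sq_err = (forecast - truth) ** 2             # shape (lat, lon)
    return float(np.sqrt(np.mean(weights[:, None] * sq_err)))

def scorecard(rmse_model, rmse_baseline):
    """Fraction of verification targets on which the model's RMSE beats the baseline's."""
    wins = [m < b for m, b in zip(rmse_model, rmse_baseline)]
    return sum(wins) / len(wins)

# Hypothetical toy data: a 7 x 12 grid running from pole to pole.
lats = np.linspace(-90.0, 90.0, 7)
truth = np.zeros((7, 12))
forecast = np.ones((7, 12))                      # off by 1 unit everywhere
print("lat-weighted RMSE:", lat_weighted_rmse(forecast, truth, lats))

# Hypothetical per-target RMSEs for a new model and a baseline (four targets).
print("share of targets won:", scorecard([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 2.0, 5.0]))
```

A figure such as “better on 90% of targets” is this `scorecard` fraction taken over a much larger set of targets; it says how often a model wins, not by how much.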

How AI in Weather Forecasting Works

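AI’s ability to process vast datasets from satellites, radar, and historical weather records enables highly accurate and rapid predictions. The leading global models are trained on decades of historical reanalysis data, such as ECMWF’s ERA5, and learn to advance the state of the atmosphere by a fixed time step (six hours for GraphCast and FourCastNet). Starting from the current observed state, they apply that step repeatedly to build a multi-day forecast. Unlike traditional NWP models, which require hours of supercomputer processing, AI models can deliver a global forecast in seconds to minutes.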
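The train-then-roll-out pattern can be sketched on a toy problem. Everything here is an assumption for illustration: a hypothetical one-dimensional “atmosphere” on a periodic ring, a linear one-step emulator fit by least squares instead of a deep network, and 40 steps standing in for 10 days of six-hour steps. Only the structure (learn one step from historical pairs, then feed predictions back in) mirrors the models described above.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 32  # grid points on a periodic 1-D ring (toy size)

def true_step(x):
    """Hypothetical 'atmosphere': shift the field one cell east and smooth it slightly."""
    shifted = np.roll(x, 1)
    return 0.9 * shifted + 0.05 * (np.roll(shifted, 1) + np.roll(shifted, -1))

# 1. "Historical record": many (state, next state) pairs, analogous to reanalysis data.
X = rng.standard_normal((2000, N))
Y = np.array([true_step(x) for x in X])

# 2. Training: fit a linear one-step emulator W so that X @ W approximates Y.
W, *_ = np.linalg.lstsq(X, Y, rcond=None)

def rollout(x0, steps):
    """Autoregressive forecast: each prediction becomes the next input."""
    states = [x0]
    for _ in range(steps):
        states.append(states[-1] @ W)
    return np.array(states)

# 3. Forecast 40 steps ahead and compare against the true dynamics.
x0 = rng.standard_normal(N)
forecast = rollout(x0, 40)
reference = [x0]
for _ in range(40):
    reference.append(true_step(reference[-1]))
reference = np.array(reference)
print("max abs error after 40 steps:", np.abs(forecast - reference).max())
```

Because this toy dynamics is linear and noise-free, the emulator recovers it almost exactly; with real, nonlinear weather, small errors in each learned step compound over the rollout, which is one reason forecast skill still declines with lead time.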

- Google DeepMind’s GraphCast: Introduced in 2023, GraphCast uses graph neural networks to generate 10-day global forecasts in under a minute on a single Google TPU v4 machine. It outperforms ECMWF’s flagship deterministic model, HRES, on 90% of the 1,380 verification targets evaluated (combinations of variable, pressure level, and lead time), excelling in predicting temperature, wind speed, and humidity. This efficiency makes rapid, frequently refreshed global forecasts practical.

- NVIDIA’s FourCastNet: Built on Adaptive Fourier Neural Operators, FourCastNet produces high-resolution global forecasts (0.25°, roughly 25 km at the equator) with up to 20% fewer errors in storm track predictions compared to NWP models. Its speed supports real-time updates while extreme events such as storms are unfolding.

- Huawei’s Pangu-Weather: This model uses a 3D Earth-specific transformer, treating pressure level as a third dimension alongside latitude and longitude, to analyze atmospheric data. It offers accuracy competitive with operational NWP at a lower computational cost, which makes it particularly valuable for regions with limited supercomputing resources.
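GraphCast’s core building block is message passing on a graph. Its actual design encodes the latitude-longitude grid onto a multi-resolution icosahedral mesh, runs a stack of learned message-passing layers there, and decodes back to the grid. The sketch below shows only one simplified message-passing layer on a hypothetical six-node ring, with random weights and `tanh` standing in for the learned MLPs.

```python
import numpy as np

def message_passing_layer(node_feats, edges, W_msg, W_upd):
    """One round of message passing on a directed graph.

    node_feats: (num_nodes, d) array of per-node features
    edges:      list of (sender, receiver) index pairs
    W_msg:      (2d, d) weights turning [sender, receiver] features into a message
    W_upd:      (2d, d) weights combining a node's features with its summed messages
    """
    num_nodes, d = node_feats.shape
    inbox = np.zeros((num_nodes, d))
    for s, r in edges:
        pair = np.concatenate([node_feats[s], node_feats[r]])
        inbox[r] += np.tanh(pair @ W_msg)           # message depends on both endpoints
    combined = np.concatenate([node_feats, inbox], axis=1)
    return node_feats + np.tanh(combined @ W_upd)   # residual update

# Toy graph: 6 nodes on a ring, edges in both directions (a stand-in for a mesh).
num_nodes, d = 6, 4
edges = [(i, (i + 1) % num_nodes) for i in range(num_nodes)]
edges += [(r, s) for s, r in edges]

rng = np.random.default_rng(0)
W_msg = rng.standard_normal((2 * d, d)) * 0.5
W_upd = rng.standard_normal((2 * d, d)) * 0.5

# Only node 0 starts with non-zero features, so we can watch information spread.
h = np.zeros((num_nodes, d))
h[0] = 1.0
h1 = message_passing_layer(h, edges, W_msg, W_upd)
print("nodes reached after 1 layer:", np.flatnonzero(np.abs(h1).sum(axis=1)))
```

Each layer moves information one hop, so stacking layers, and adding long-range edges as a multi-resolution mesh does, lets distant weather systems influence each other within a single forecast step.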
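FourCastNet’s Adaptive Fourier Neural Operators build on the spectral convolution at the heart of the original Fourier neural operator: transform a field to frequency space, multiply a limited set of Fourier modes by learnable weights, and transform back. The sketch below shows that operation in 1-D only, with hand-set weights in place of learned ones; FourCastNet applies an adaptive 2-D variant over the globe inside a transformer-style network, and a full FNO layer also adds a pointwise linear term and a nonlinearity.

```python
import numpy as np

def spectral_conv_1d(u, weights):
    """Spectral convolution, the core of a Fourier neural operator layer, in 1-D.

    u:       real signal on a periodic grid, shape (n,)
    weights: complex array of shape (k,), one multiplier per retained Fourier mode
    """
    k = len(weights)
    u_hat = np.fft.rfft(u)                 # n // 2 + 1 complex Fourier coefficients
    out_hat = np.zeros_like(u_hat)
    out_hat[:k] = u_hat[:k] * weights      # scale the k lowest modes; drop the rest
    return np.fft.irfft(out_hat, n=len(u))

# A large-scale wave plus small-scale ripples on a periodic 64-point grid.
n = 64
x = 2 * np.pi * np.arange(n) / n
u = np.sin(x) + 0.3 * np.sin(10 * x)

# Hand-set weights that keep modes 0-2 unchanged; a trained model would learn these.
out = spectral_conv_1d(u, np.ones(3, dtype=complex))
print("max deviation from the large-scale wave:", np.abs(out - np.sin(x)).max())
```

Because the weights act on Fourier modes rather than on grid cells, each one influences the whole domain at once, which suits large-scale atmospheric patterns and keeps the cost close to that of an FFT.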
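A “3D” transformer such as Pangu-Weather’s first cuts the atmosphere into blocks that span several pressure levels as well as latitude and longitude, turns each block into a token, and lets tokens exchange information through attention. The sketch below is a simplification under stated assumptions: the grid sizes, patch shape, and embedding width are hypothetical, and it uses plain global single-head attention, whereas Pangu-Weather uses windowed attention with Earth-specific positional bias.

```python
import numpy as np

def patchify_3d(cube, patch):
    """Split a (levels, lat, lon, channels) cube into non-overlapping 3-D patches.

    Returns tokens of shape (num_patches, pz * py * px * channels); each token
    flattens one pz x py x px block across all variables.
    """
    L, H, W, C = cube.shape
    pz, py, px = patch
    assert L % pz == 0 and H % py == 0 and W % px == 0, "grid must divide evenly"
    blocks = cube.reshape(L // pz, pz, H // py, py, W // px, px, C)
    blocks = blocks.transpose(0, 2, 4, 1, 3, 5, 6)  # patch-index axes first
    return blocks.reshape(-1, pz * py * px * C)

def self_attention(tokens, Wq, Wk, Wv):
    """Single-head scaled dot-product attention over all tokens."""
    q, k, v = tokens @ Wq, tokens @ Wk, tokens @ Wv
    scores = q @ k.T / np.sqrt(q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)    # numerical stability before exp
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)         # each row sums to 1
    return attn @ v

# Hypothetical sizes: 8 pressure levels, a 32 x 64 lat-lon grid, 5 variables per cell.
rng = np.random.default_rng(0)
cube = rng.standard_normal((8, 32, 64, 5))
tokens = patchify_3d(cube, patch=(2, 4, 4))        # 4 * 8 * 16 = 512 tokens of length 160

d = 16
embed = rng.standard_normal((tokens.shape[1], d)) / np.sqrt(tokens.shape[1])
x = tokens @ embed                                  # linear patch embedding
Wq, Wk, Wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
mixed = self_attention(x, Wq, Wk, Wv)
print(tokens.shape, mixed.shape)
```

Grouping vertical levels into the same token lets the network relate conditions aloft to those near the surface directly, and patching shrinks the number of tokens attention must handle, which helps keep computational cost down.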

Hyper-Local and Nowcasting Capabilities

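AI enables hyper-local weather forecasting, providing predictions at the neighborhood or even street level, as well as nowcasting, which forecasts conditions over the next few hours from the latest radar and satellite observations. Both are vital for urban planning, agriculture, and public safety.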
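A common baseline for nowcasting, and a usual point of comparison for machine-learning nowcasters, is extrapolation: estimate how rain echoes moved between the two most recent radar scans, then assume they keep moving the same way. The sketch below is deliberately minimal and assumes a single uniform motion found by brute-force search over integer shifts, on a synthetic rain cell; operational extrapolation methods estimate a full motion field (optical flow) instead.

```python
import numpy as np

def estimate_shift(prev, curr, max_shift=5):
    """Find the integer (dy, dx) that best maps the previous radar frame onto the current one.

    Brute-force search minimising mean squared difference. np.roll wraps around
    the edges, which is acceptable only for this toy periodic example.
    """
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err = np.mean((np.roll(prev, (dy, dx), axis=(0, 1)) - curr) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

def extrapolation_nowcast(prev, curr, steps):
    """Persistence-of-motion nowcast: keep moving the latest frame at the estimated velocity."""
    dy, dx = estimate_shift(prev, curr)
    return [np.roll(curr, (dy * k, dx * k), axis=(0, 1)) for k in range(1, steps + 1)]

# Synthetic radar: a Gaussian rain cell moving down 1 row and right 2 columns per scan.
yy, xx = np.mgrid[0:40, 0:40]
prev = np.exp(-((yy - 15) ** 2 + (xx - 10) ** 2) / 20.0)
curr = np.roll(prev, (1, 2), axis=(0, 1))

nowcast = extrapolation_nowcast(prev, curr, steps=3)
print("estimated motion per scan:", estimate_shift(prev, curr))
```

Extrapolation cannot make storms grow, decay, or form, which is precisely where learned nowcasting models aim to add skill in the first few hours.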

The Future of Weather Forecasting Is in AI

Overreliance on AI risks sidelining human expertise, since meteorologists remain essential for interpreting complex scenarios. Investment in physical infrastructure, such as weather stations in developing nations, also remains critical: AI models learn from historical observations and start each forecast from an observed state of the atmosphere, so sparse observations limit their accuracy. Finally, the dominance of tech giants like Google and IBM raises concerns about commercialization, underscoring the need for public institutions to retain influence so that AI weather forecasting continues to serve the public good.